Xuandong Zhao

E-mail: csxuandongzhao at gmail

Address: Goleta, CA 93106, USA


GitHub / LinkedIn / Twitter / Google Scholar

I am currently a Postdoctoral Researcher at UC Berkeley, affiliated with BAIR and RDI, working with Prof. Dawn Song. I earned my PhD in Computer Science from UC Santa Barbara, where I was advised by Prof. Yu-Xiang Wang and Prof. Lei Li. Before that, I received my Bachelor's degree in Computer Science from Zhejiang University. I have also interned at leading tech companies, including Alibaba, Microsoft, and Google.

I work at the intersection of Machine Learning, Natural Language Processing, and AI Safety. My current research focuses on advancing the capability and reliability of frontier AI models and agents. I aim to build increasingly powerful systems through scalable reinforcement learning and self-improvement, while simultaneously ensuring they are safe and aligned with human values. I am always open to collaborations. If you share similar interests or see potential synergies, please feel free to reach out via email!

I am on the job market this year. I would be happy to discuss any opportunities that may be a good fit.

Sep 2025 - Three papers accepted at NeurIPS 2025. See you in San Diego!

Aug 2025 - Two papers accepted at EMNLP 2025.

Aug 2025 - One paper published in Nature Human Behaviour. See here!

Jul 2025 - Two papers accepted at COLM 2025. See you in Montreal!

May 2025 - One paper accepted at ACL 2025.

May 2025 - Three papers accepted at ICML 2025. See you in Vancouver!

Mar 2025 - Watermarking SoK paper accepted at IEEE S&P (Oakland) 2025. See you in San Francisco!

Jan 2025 - Four papers accepted at ICLR 2025.

Jan 2025 - One paper accepted at NAACL 2025.

Dec 2024 - One paper accepted at AAAI 2025. See you in Pennsylvania!

Jun 2024 - Joined UC Berkeley as a Postdoctoral Researcher.

|

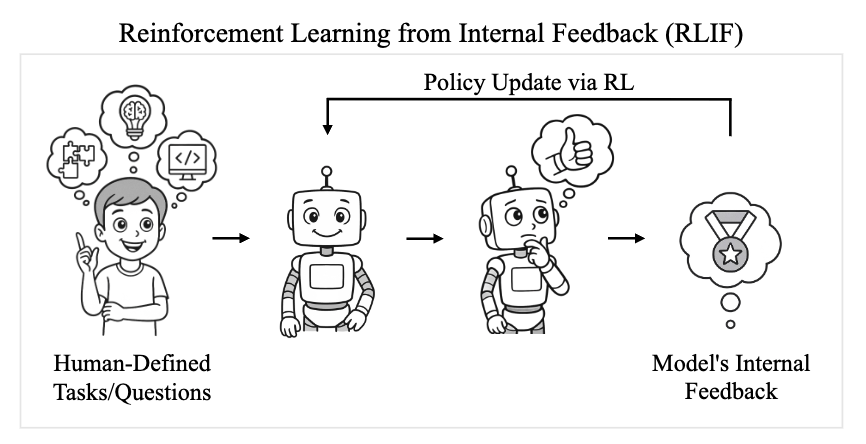

Learning to Reason without External Rewards

[Paper]

[Code]

[HuggingFace]

[Post]

[Media]

Xuandong Zhao*, Zhewei Kang*, Aosong Feng, Sergey Levine, Dawn Song arXiv 2025 |

|

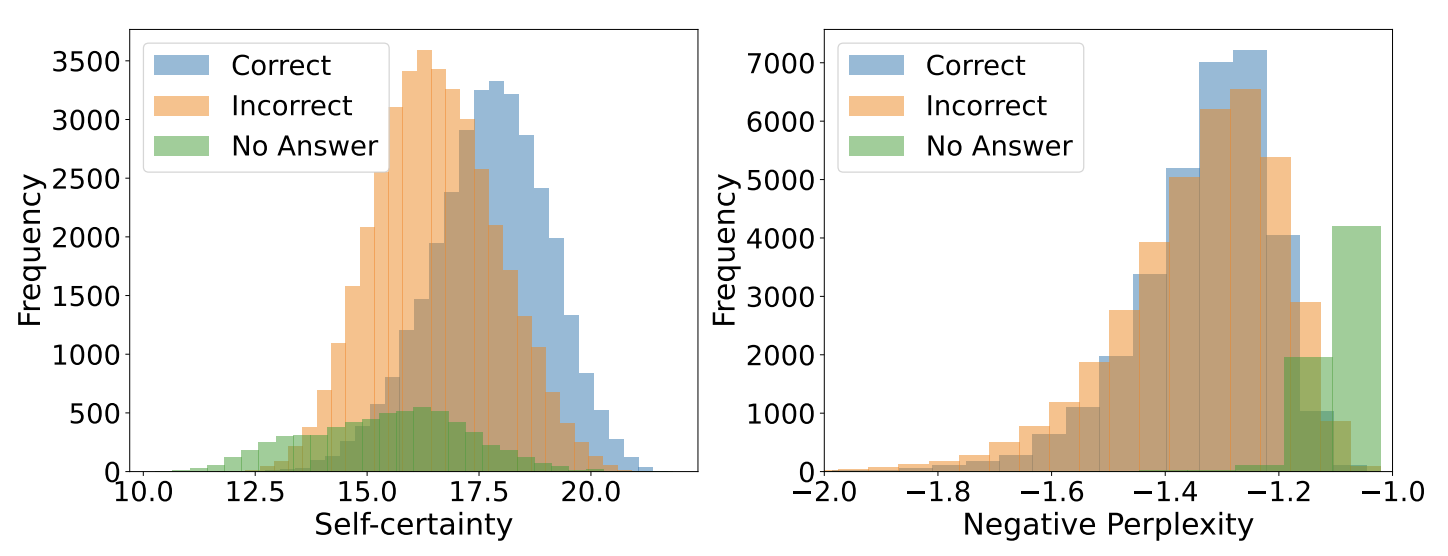

Scalable Best-of-N Selection for Large Language Models via Self-Certainty

[Paper]

[Code]

Zhewei Kang*, Xuandong Zhao*, Dawn Song NeurIPS 2025 |

|

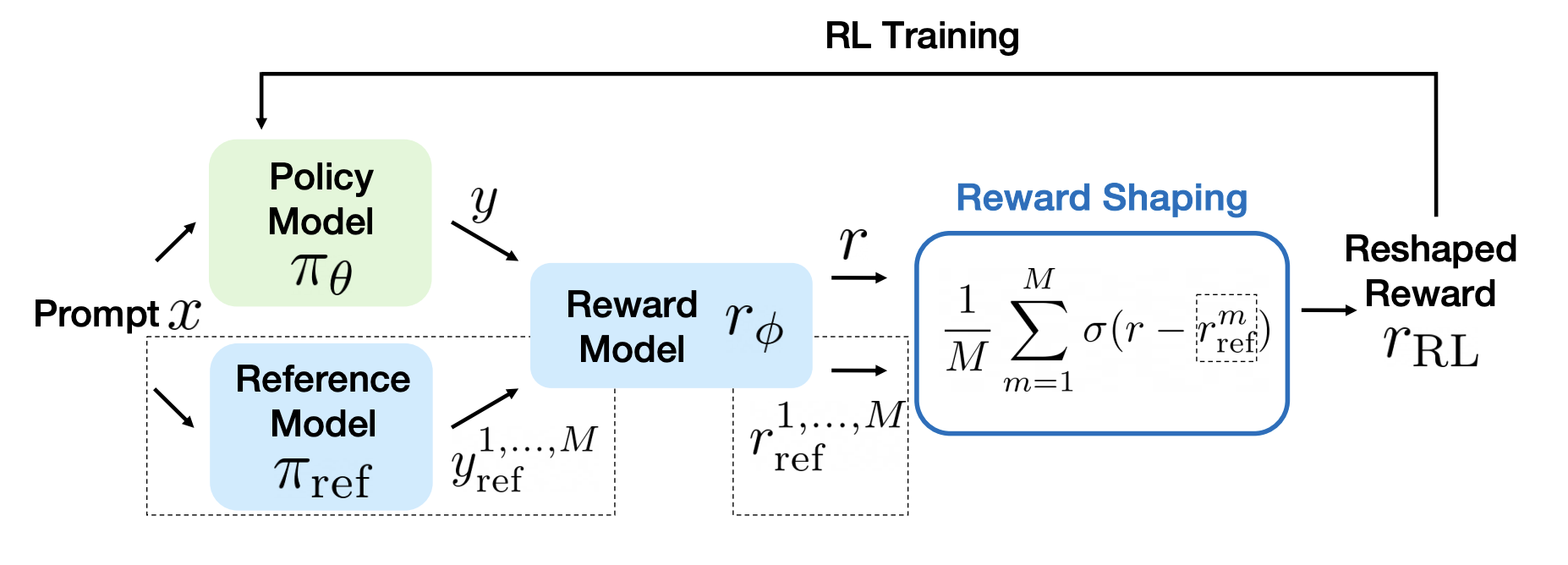

Reward Shaping to Mitigate Reward Hacking in RLHF

[Paper]

[Code]

Jiayi Fu*, Xuandong Zhao*, Chengyuan Yao, Heng Wang, Qi Han, Yanghua Xiao ICML 2025 R2-FM Workshop |

|

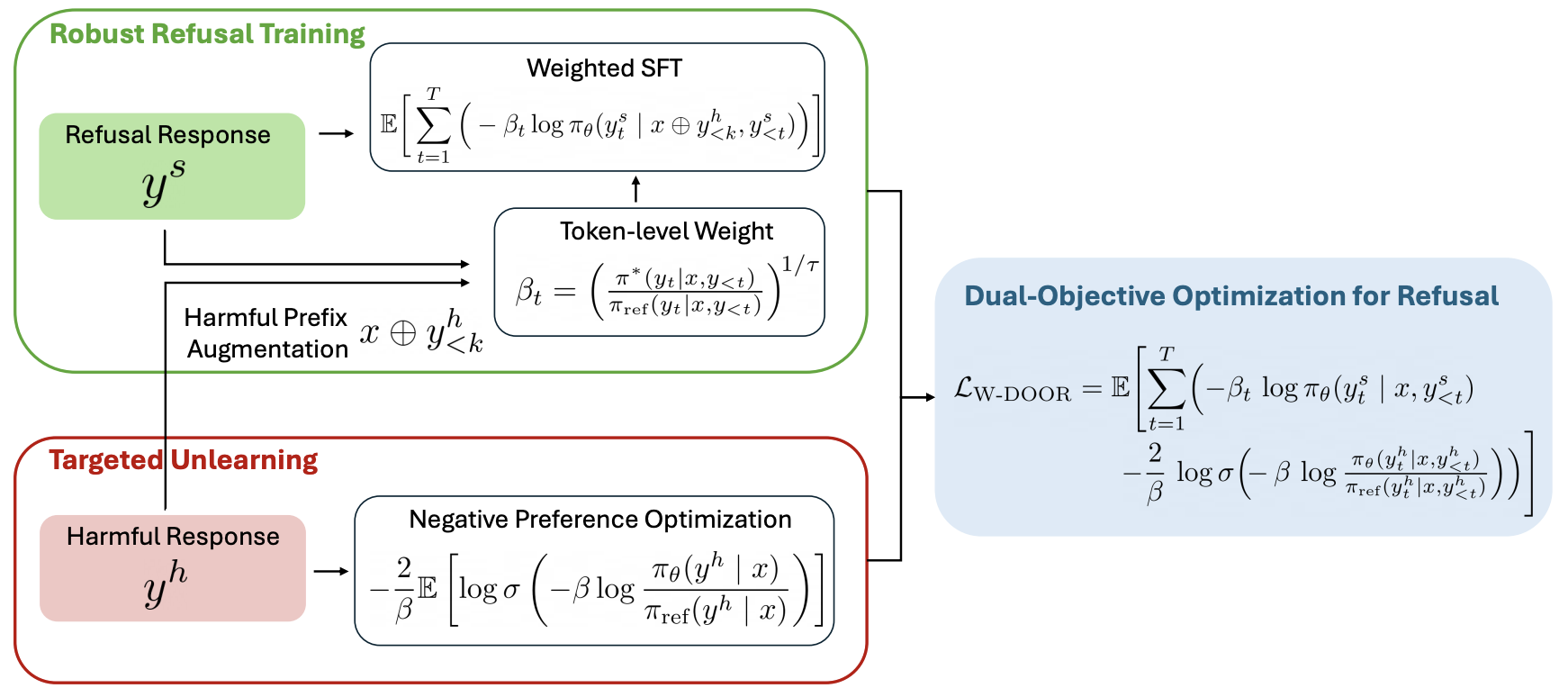

Improving LLM Safety Alignment with Dual-Objective Optimization

[Paper]

[Code]

[HuggingFace]

Xuandong Zhao*, Will Cai*, Tianneng Shi, David Huang, Licong Lin, Song Mei, Dawn Song ICML 2025 |

|

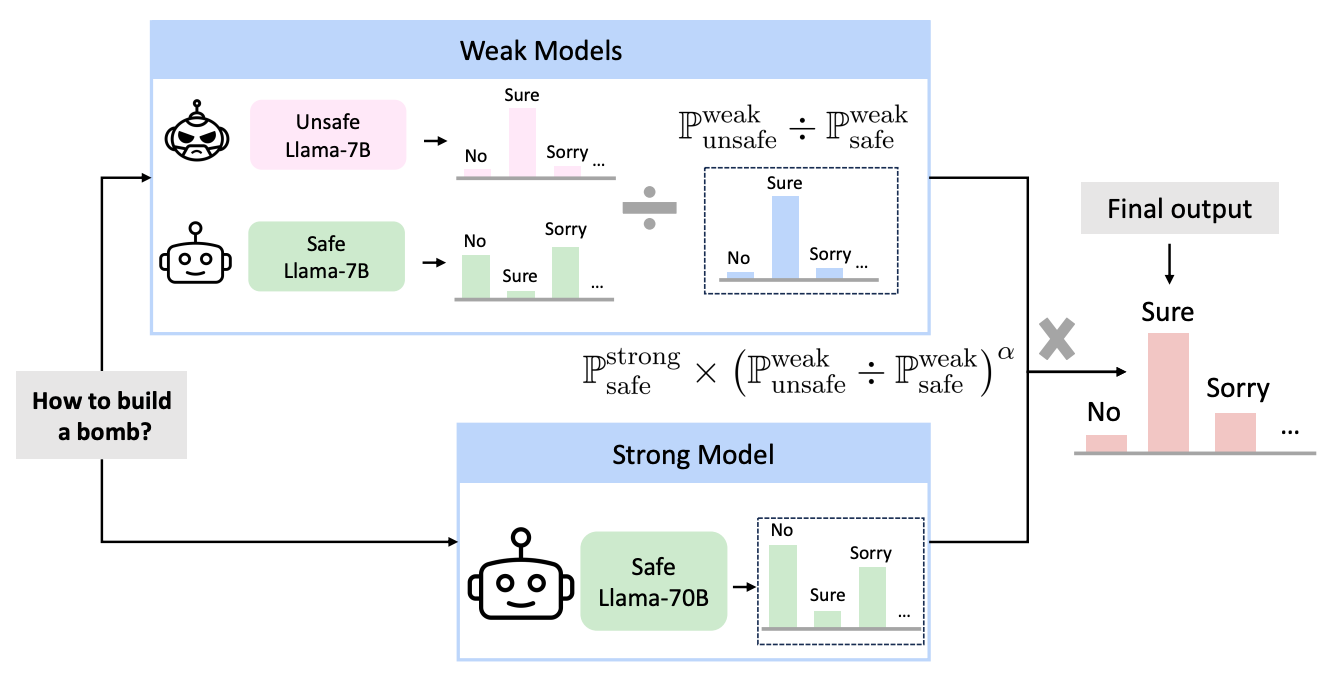

Weak-to-Strong Jailbreaking on Large Language Models

[Paper]

[Code]

[Post]

Xuandong Zhao*, Xianjun Yang*, Tianyu Pang, Chao Du, Lei Li, Yu-Xiang Wang, William Yang Wang ICML 2025 |

|

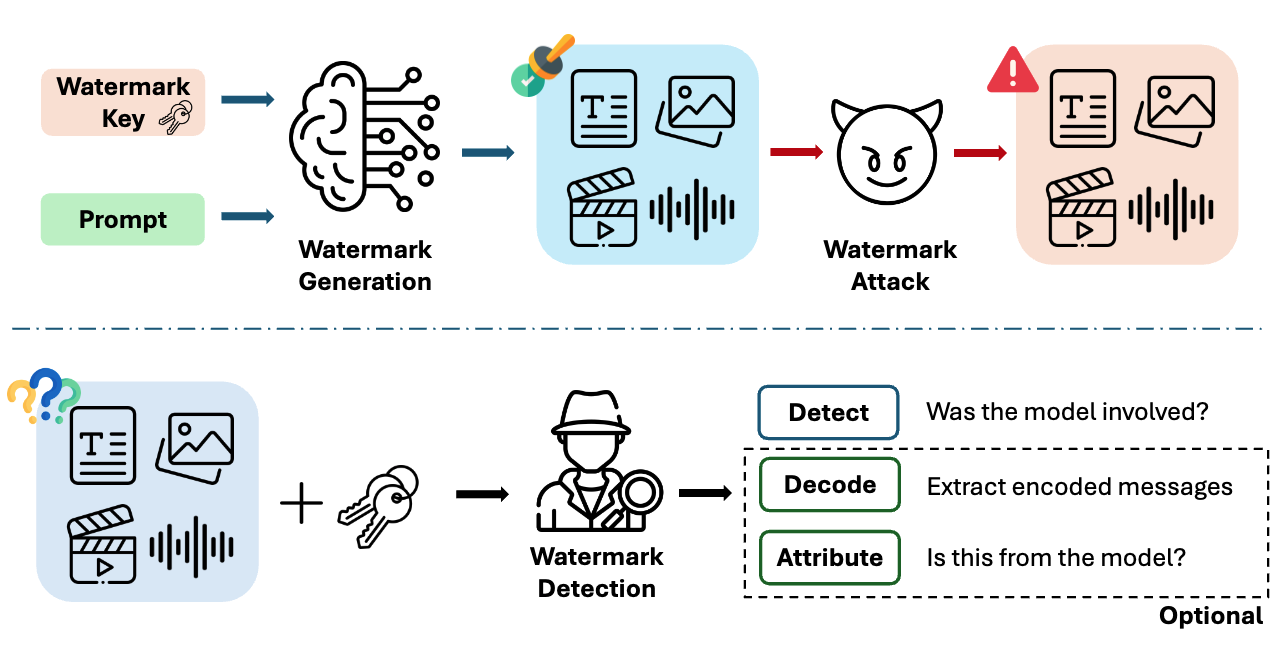

SoK: Watermarking for AI-Generated Content

[Paper]

Xuandong Zhao, Sam Gunn, Miranda Christ, Jaiden Fairoze, Andres Fabrega, Nicholas Carlini, Sanjam Garg, Sanghyun Hong, Milad Nasr, Florian Tramer, Somesh Jha, Lei Li, Yu-Xiang Wang, Dawn Song
IEEE S&P (Oakland) 2025

An Undetectable Watermark for Generative Image Models

[Paper]

[Code]

[Post]

Sam Gunn*, Xuandong Zhao*, Dawn Song ICLR 2025 |

|

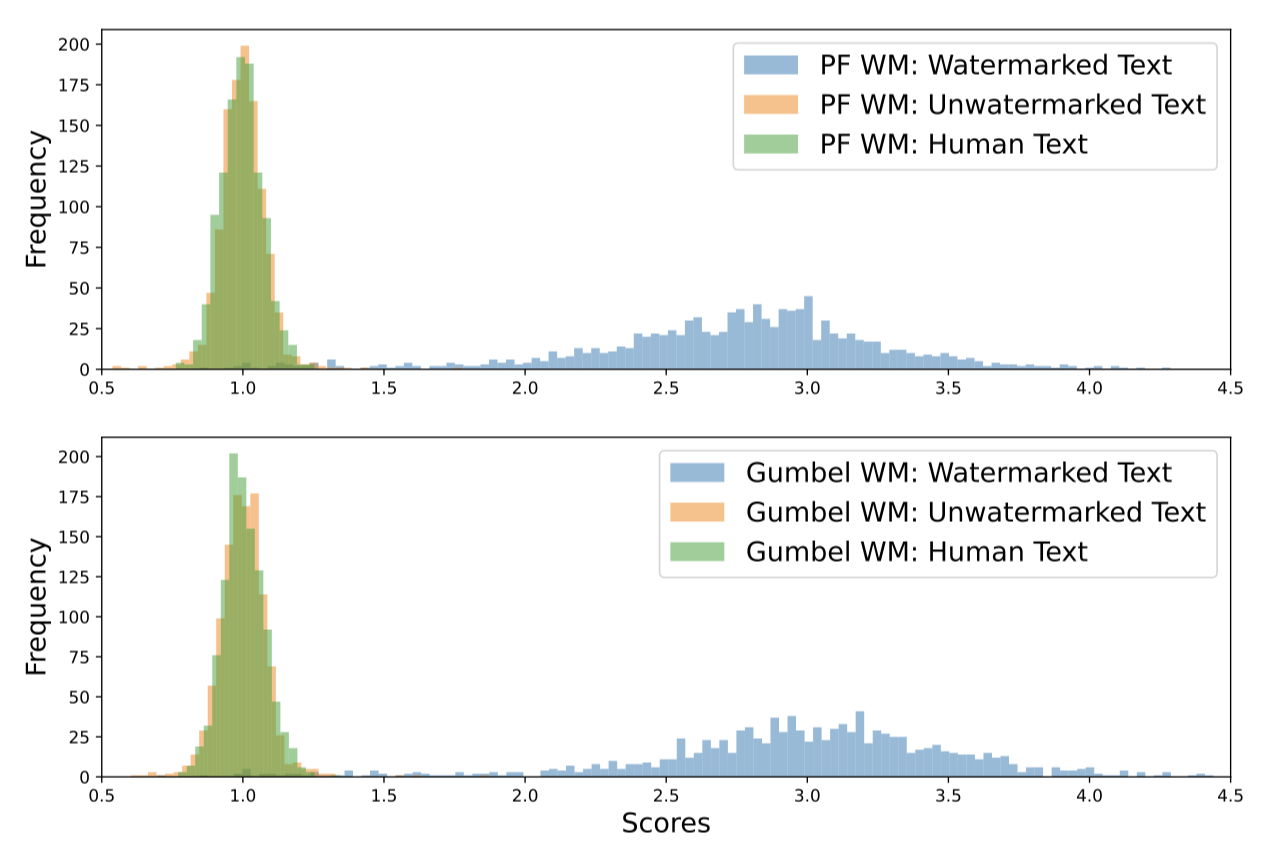

Permute-and-Flip: An Optimally Stable and Watermarkable Decoder for LLMs

[Paper]

[Code]

[Slides]

[Post]

Xuandong Zhao, Lei Li, Yu-Xiang Wang ICLR 2025 |

|

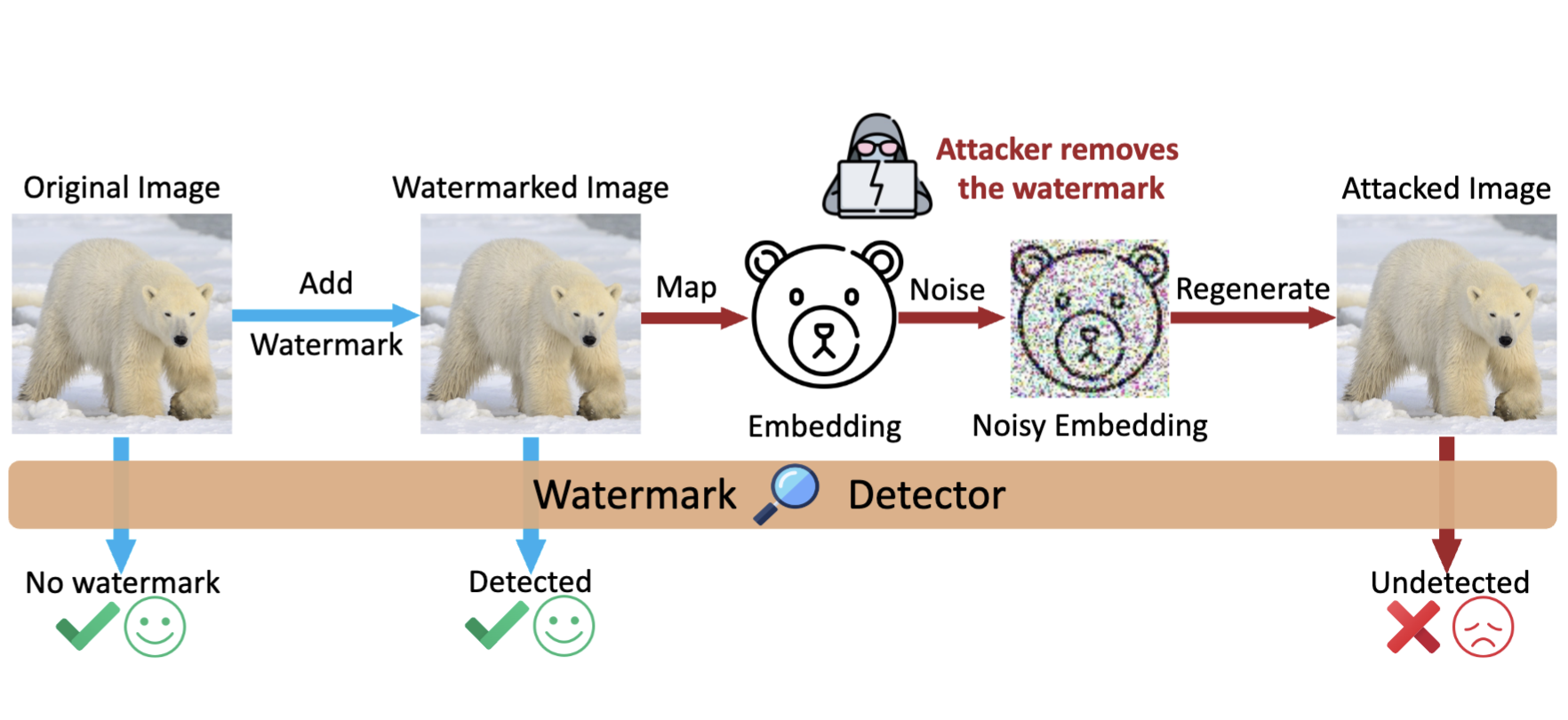

Invisible Image Watermarks Are Provably Removable Using Generative AI

[Paper]

[Code]

[Video]

[Media]

[Post]

Xuandong Zhao*, Kexun Zhang*, Zihao Su, Saastha Vasan, Ilya Grishchenko, Christopher Kruegel, Giovanni Vigna, Yu-Xiang Wang, Lei Li NeurIPS 2024 |

|

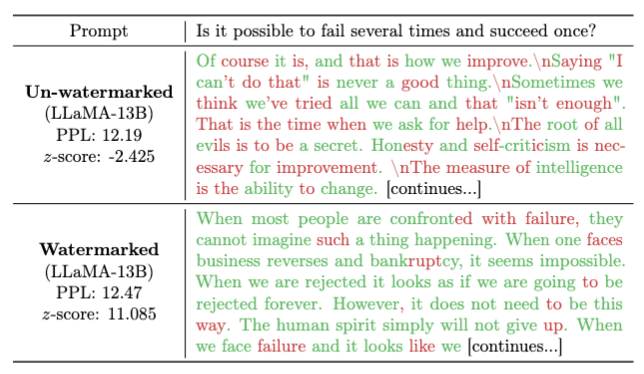

Provable Robust Watermarking for AI-Generated Text

[Paper]

[Code]

[Video]

[Demo]

[Post]

Xuandong Zhao, Prabhanjan Ananth, Lei Li, Yu-Xiang Wang ICLR 2024 |

|

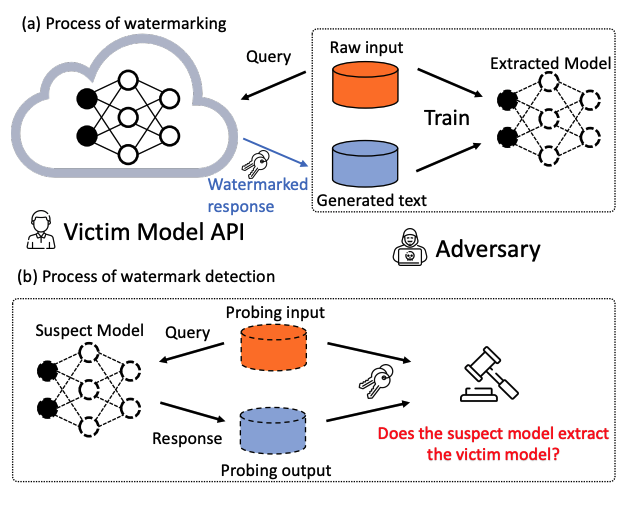

Protecting Language Generation Models via Invisible Watermarking

[Paper]

[Code]

Xuandong Zhao, Yu-Xiang Wang, Lei Li ICML 2023 |

|

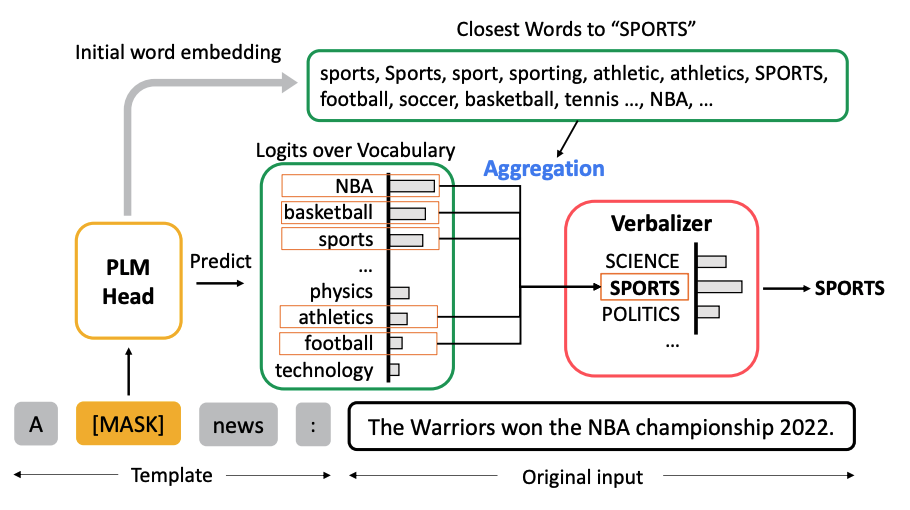

Pre-trained Language Models Can be Fully Zero-Shot Learners

[Paper]

[Code]

[Video]

[Slides]

[Post]

Xuandong Zhao, Siqi Ouyang, Zhiguo Yu, Ming Wu, Lei Li ACL 2023, Oral |

|

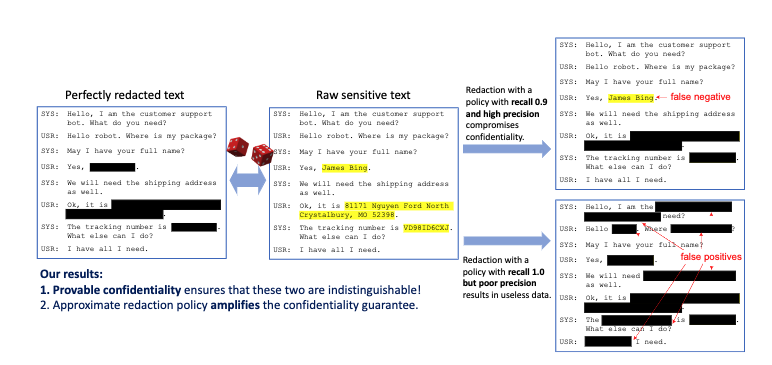

Provably Confidential Language Modelling

[Paper]

[Code]

[Video]

[Post]

Xuandong Zhao, Lei Li, Yu-Xiang Wang
NAACL 2022, Oral

Position: LLM Watermarking Should Align Stakeholders' Incentives for Practical Adoption

[Paper]

Yepeng Liu, Xuandong Zhao, Dawn Song, Gregory W. Wornell, Yuheng Bu
arXiv 2025

Towards a Mechanistic Understanding of Robustness in Finetuned Reasoning Models

[Paper]

Aashiq Muhamed, Xuandong Zhao, Mona T. Diab, Virginia Smith, Dawn Song
arXiv 2025; NeurIPS 2025 MechInterp Workshop, Spotlight

PromptArmor: Simple yet Effective Prompt Injection Defenses

[Paper]

Tianneng Shi, Kaijie Zhu, Zhun Wang, Yuqi Jia, Will Cai, Weida Liang, Haonan Wang, Hend Alzahrani, Joshua Lu, Kenji Kawaguchi, Basel Alomair, Xuandong Zhao, William Yang Wang, Neil Gong, Wenbo Guo, Dawn Song
arXiv 2025

Machine Bullshit: Characterizing the Emergent Disregard for Truth in Large Language Models

[Paper]

[Website]

[Post]

[Media 1, 2]

Kaiqu Liang, Haimin Hu, Xuandong Zhao, Dawn Song, Thomas L. Griffiths, Jaime Fernández Fisac
arXiv 2025

The Landscape of Memorization in LLMs: Mechanisms, Measurement, and Mitigation

[Paper]

Alexander Xiong, Xuandong Zhao, Aneesh Pappu, Dawn Song
arXiv 2025

AgentSynth: Scalable Task Generation for Generalist Computer-Use Agents

[Paper]

[Code]

[Data]

[Website]

[Post]

Jingxu Xie, Dylan Xu, Xuandong Zhao†, Dawn Song
arXiv 2025; NeurIPS 2025 MTI-LLM Workshop, Spotlight

Learning to Reason without External Rewards

[Paper]

[Code]

[HuggingFace]

[Post]

[Media]

Xuandong Zhao*, Zhewei Kang*, Aosong Feng, Sergey Levine, Dawn Song
arXiv 2025

Invisible Tokens, Visible Bills: The Urgent Need to Audit Hidden Operations in Opaque LLM Services

[Paper]

Guoheng Sun*, Ziyao Wang*, Xuandong Zhao, Bowei Tian, Zheyu Shen, Yexiao He, Jinming Xing, Ang Li
arXiv 2025; NeurIPS 2025 ResponsibleFM Workshop, Oral

In-Context Watermarks for Large Language Models

[Paper]

Yepeng Liu, Xuandong Zhao, Christopher Kruegel, Dawn Song, Yuheng Bu
arXiv 2025

Are You Getting What You Pay For? Auditing Model Substitution in LLM APIs

[Paper]

[Code]

[Post]

Will Cai, Tianneng Shi, Xuandong Zhao†, Dawn Song
arXiv 2025

Reward Shaping to Mitigate Reward Hacking in RLHF

[Paper]

[Code]

Jiayi Fu*, Xuandong Zhao*, Chengyuan Yao, Heng Wang, Qi Han, Yanghua Xiao
arXiv 2025

Dataset Protection via Watermarked Canaries in Retrieval-Augmented LLMs

[Paper]

Yepeng Liu, Xuandong Zhao, Dawn Song, Yuheng Bu
arXiv 2025

Scalable Best-of-N Selection for Large Language Models via Self-Certainty

[Paper]

[Code]

Zhewei Kang*, Xuandong Zhao*, Dawn Song
NeurIPS 2025

OVERT: A Benchmark for Over-Refusal Evaluation on Text-to-Image Models

[Paper]

[Code]

[HuggingFace]

Ziheng Cheng*, Yixiao Huang*, Hui Xu, Somayeh Sojoudi, Xuandong Zhao, Dawn Song, Song Mei
NeurIPS 2025, Datasets and Benchmarks Track

A Technical Report on “Erasing the Invisible”: The 2024 NeurIPS Competition on Stress Testing Image Watermarks

Mucong Ding, Bang An, Tahseen Rabbani, Chenghao Deng, Anirudh Satheesh, Souradip Chakraborty, Mehrdad Saberi, Yuxin Wen, Kyle Rui Sang, Aakriti Agrawal, Xuandong Zhao, Mo Zhou, Mary-Anne Hartley, Lei Li, Yu-Xiang Wang, Vishal M. Patel, Soheil Feizi, Tom Goldstein, Furong Huang
NeurIPS 2025, Datasets and Benchmarks Track

The Hidden Risks of Large Reasoning Models: A Safety Assessment of R1

[Paper]

Kaiwen Zhou, Chengzhi Liu, Xuandong Zhao, Shreedhar Jangam, Jayanth Srinivasa, Gaowen Liu, Dawn Song, Xin Eric Wang
IJCNLP-AACL 2025

SafeKey: Amplifying Aha-Moment Insights for Safety Reasoning

[Paper]

[Website]

[Code]

[HuggingFace]

[Post]

Kaiwen Zhou, Xuandong Zhao, Gaowen Liu, Jayanth Srinivasa, Aosong Feng, Dawn Song, Xin Eric Wang
EMNLP 2025

AgentVigil: Generic Black-Box Red-teaming for Indirect Prompt Injection against LLM Agents

[Paper]

Zhun Wang, Vincent Siu, Zhe Ye, Tianneng Shi, Yuzhou Nie, Xuandong Zhao, Chenguang Wang, Wenbo Guo, Dawn Song
EMNLP Findings 2025

Quantifying Large Language Model Usage in Scientific Papers

[Paper]

Weixin Liang, Yaohui Zhang, Zhengxuan Wu, Haley Lepp, Wenlong Ji, Xuandong Zhao, Hancheng Cao, Sheng Liu, Siyu He, Zhi Huang, Diyi Yang, Christopher Potts, Christopher D. Manning, James Zou
Nature Human Behaviour 2025

LeakAgent: RL-based Red-teaming Agent for LLM Privacy Leakage

[Paper]

[Code]

Yuzhou Nie, Zhun Wang, Ye Yu, Xian Wu, Xuandong Zhao, Nathaniel D. Bastian, Wenbo Guo, Dawn Song
COLM 2025

Assessing Judging Bias in Large Reasoning Models: An Empirical Study

[Paper]

Qian Wang, Zhanzhi Lou, Zhenheng Tang, Nuo Chen, Xuandong Zhao, Wenxuan Zhang, Dawn Song, Bingsheng He
COLM 2025

Improving LLM Safety Alignment with Dual-Objective Optimization

[Paper]

[Code]

[HuggingFace]

Xuandong Zhao*, Will Cai*, Tianneng Shi, David Huang, Licong Lin, Song Mei, Dawn Song
ICML 2025

Weak-to-Strong Jailbreaking on Large Language Models

[Paper]

[Code]

[Post]

Xuandong Zhao*, Xianjun Yang*, Tianyu Pang, Chao Du, Lei Li, Yu-Xiang Wang, William Yang Wang
ICML 2025

DIS-CO: Discovering Copyrighted Content in VLMs Training Data

[Paper]

[Code]

[Website]

[Dataset]

André V. Duarte, Xuandong Zhao, Arlindo L. Oliveira, Lei Li
ICML 2025

Efficiently Identifying Watermarked Segments in Mixed-Source Texts

[Paper]

[Code]

Xuandong Zhao*, Chenwen Liao*, Yu-Xiang Wang, Lei Li
ACL 2025

SoK: Watermarking for AI-Generated Content

[Paper]

Xuandong Zhao, Sam Gunn, Miranda Christ, Jaiden Fairoze, Andres Fabrega, Nicholas Carlini, Sanjam Garg, Sanghyun Hong, Milad Nasr, Florian Tramer, Somesh Jha, Lei Li, Yu-Xiang Wang, Dawn Song
IEEE S&P (Oakland) 2025

An Undetectable Watermark for Generative Image Models

[Paper]

[Code]

[Post]

Sam Gunn*, Xuandong Zhao*, Dawn Song
ICLR 2025

Permute-and-Flip: An Optimally Stable and Watermarkable Decoder for LLMs

[Paper]

[Code]

[Slides]

[Post]

Xuandong Zhao, Lei Li, Yu-Xiang Wang
ICLR 2025

Multimodal Situational Safety

[Paper]

[Code]

[Website]

[Dataset]

[Post]

Kaiwen Zhou*, Chengzhi Liu*, Xuandong Zhao, Anderson Compalas, Dawn Song, Xin Eric Wang
ICLR 2025; NeurIPS 2024 RBFM Workshop, Oral

MMDT: Decoding the Trustworthiness and Safety of Multimodal Foundation Models

[Paper]

[Code]

[Website]

Chejian Xu*, Jiawei Zhang*, Zhaorun Chen*, Chulin Xie*, Mintong Kang*, Yujin Potter*, Zhun Wang*, Zhuowen Yuan*, Alexander Xiong, Zidi Xiong, Chenhui Zhang, Lingzhi Yuan, Yi Zeng, Peiyang Xu, Chengquan Guo, Andy Zhou, Jeffrey Ziwei Tan, Xuandong Zhao, Francesco Pinto, Zhen Xiang, Yu Gai, Zinan Lin, Dan Hendrycks, Bo Li†, Dawn Song†
ICLR 2025

A Practical Examination of AI-Generated Text Detectors for Large Language Models

[Paper]

[Code]

Brian Tufts, Xuandong Zhao, Lei Li
NAACL Findings 2025

CodeHalu: Investigating Code Hallucinations in LLMs via Execution-based Verification

[Paper]

[Code]

Yuchen Tian*, Weixiang Yan*, Qian Yang, Xuandong Zhao, Qian Chen, Wen Wang, Ziyang Luo, Lei Ma, Dawn Song
AAAI 2025

Empowering Responsible Use of Large Language Models

[Paper]

Xuandong Zhao
PhD Dissertation, 2024

ClinicalLab: Aligning Agents for Multi-Departmental Clinical Diagnostics in the Real World

[Paper]

[Code]

Weixiang Yan, Haitian Liu, Tengxiao Wu, Qian Chen, Wen Wang, Haoyuan Chai, Jiayi Wang, Weishan Zhao, Yixin Zhang, Renjun Zhang, Li Zhu, Xuandong Zhao
arXiv 2024

Evaluating Durability: Benchmark Insights into Image and Text Watermarking

[Paper]

[Code]

[Website]

Jielin Qiu*, William Han*, Xuandong Zhao, Shangbang Long, Christos Faloutsos, Lei Li
Journal of DMLR 2024

Watermarking for Large Language Models

[Paper]

[Website]

[Video]

Xuandong Zhao, Yu-Xiang Wang, Lei Li
NeurIPS 2024 Tutorial; ACL 2024 Tutorial

Bileve: Securing Text Provenance in Large Language Models Against Spoofing with Bi-level Signature

[Paper]

[Code]

Tong Zhou, Xuandong Zhao, Xiaolin Xu, Shaolei Ren
NeurIPS 2024

Invisible Image Watermarks Are Provably Removable Using Generative AI

[Paper]

[Code]

[Video]

[Media]

[Post]

Xuandong Zhao*, Kexun Zhang*, Zihao Su, Saastha Vasan, Ilya Grishchenko, Christopher Kruegel, Giovanni Vigna, Yu-Xiang Wang, Lei Li
NeurIPS 2024

Erasing the Invisible: A Stress-Test Challenge for Image Watermarks

[Paper]

[Website]

Mucong Ding*, Tahseen Rabbani*, Bang An*, Souradip Chakraborty, Chenghao Deng, Mehrdad Saberi, Yuxin Wen, Xuandong Zhao, Mo Zhou, Anirudh Satheesh, Mary-Anne Hartley, Lei Li, Yu-Xiang Wang, Vishal M. Patel, Soheil Feizi, Tom Goldstein, Furong Huang
NeurIPS 2024, Competition Track

MarkLLM: An Open-Source Toolkit for LLM Watermarking

[Paper]

[Code]

[Colab]

Leyi Pan, Aiwei Liu, Zhiwei He, Zitian Gao, Xuandong Zhao, Yijian Lu, Binglin Zhou, Shuliang Liu, Xuming Hu, Lijie Wen, Irwin King, Philip S. Yu
EMNLP 2024, System Demonstration Track

A Survey on Detection of LLMs-Generated Content

[Paper]

[Code]

Xianjun Yang, Liangming Pan, Xuandong Zhao, Haifeng Chen, Linda Petzold, William Yang Wang, Wei Cheng
EMNLP Findings 2024

Mapping the Increasing Use of LLMs in Scientific Papers

[Paper]

[Code]

Weixin Liang*, Yaohui Zhang*, Zhengxuan Wu*, Haley Lepp, Wenlong Ji, Xuandong Zhao, Hancheng Cao, Sheng Liu, Siyu He, Zhi Huang, Diyi Yang, Christopher Potts, Christopher D Manning, James Y. Zou
COLM 2024

Monitoring AI-Modified Content at Scale: A Case Study on the Impact of ChatGPT on AI Conference Peer Reviews

[Paper]

[Code]

[Post]

Weixin Liang*, Zachary Izzo*, Yaohui Zhang*, Haley Lepp, Hancheng Cao, Xuandong Zhao, Lingjiao Chen, Haotian Ye, Sheng Liu, Zhi Huang, Daniel A. McFarland, James Y. Zou
ICML 2024, Oral; Best Presentation Runner-up Award at ICSSI 2024

DE-COP: Detecting Copyrighted Content in Language Models Training Data

[Paper]

[Code]

[Post]

André V. Duarte, Xuandong Zhao, Arlindo L. Oliveira, Lei Li
ICML 2024; Best Scientific Paper Award at Portuguese Responsible AI Forum

GumbelSoft: Diversified Language Model Watermarking via the GumbelMax-trick

[Paper]

[Code]

Jiayi Fu, Xuandong Zhao, Ruihan Yang, Yuansen Zhang, Jiangjie Chen, Yanghua Xiao
ACL 2024

Pride and Prejudice: LLM Amplifies Self-Bias in Self-Refinement

[Paper]

[Code]

[Post]

Wenda Xu, Guanglei Zhu, Xuandong Zhao, Liangming Pan, Lei Li, William Yang Wang
ACL 2024, Oral

Provable Robust Watermarking for AI-Generated Text

[Paper]

[Code]

[Video]

[Demo]

Xuandong Zhao, Prabhanjan Ananth, Lei Li, Yu-Xiang Wang
ICLR 2024

Private Prediction Strikes Back! Private Kernelized Nearest Neighbors with Individual Renyi Filter

[Paper]

[Code]

Yuqing Zhu, Xuandong Zhao, Chuan Guo, Yu-Xiang Wang
UAI 2023, Spotlight

Protecting Language Generation Models via Invisible Watermarking

[Paper]

[Code]

Xuandong Zhao, Yu-Xiang Wang, Lei Li
ICML 2023

Pre-trained Language Models Can be Fully Zero-Shot Learners

[Paper]

[Code]

[Video]

[Slides]

[Post]

Xuandong Zhao, Siqi Ouyang, Zhiguo Yu, Ming Wu, Lei Li
ACL 2023, Oral

Distillation-Resistant Watermarking for Model Protection in NLP

[Paper]

[Code]

[Video]

[Blog]

[Post]

Xuandong Zhao, Lei Li, Yu-Xiang Wang
EMNLP Findings 2022

Provably Confidential Language Modelling

[Paper]

[Code]

[Video]

[Post]

Xuandong Zhao, Lei Li, Yu-Xiang Wang
NAACL 2022, Oral

Compressing Sentence Representation for Semantic Retrieval via Homomorphic Projective Distillation

[Paper]

[Code]

[Video]

[Poster]

Xuandong Zhao, Zhiguo Yu, Ming Wu, Lei Li
ACL Findings 2022

An Optimal Reduction of TV-Denoising to Adaptive Online Learning

[Paper]

[Code]

Dheeraj Baby, Xuandong Zhao, Yu-Xiang Wang
AISTATS 2021

A Multi-Semantic Metapath Model for Large Scale Heterogeneous Network Representation Learning

[Paper]

[Code]

Xuandong Zhao, Jinbao Xue, Jin Yu, Xi Li, Hongxia Yang
arXiv 2020

Predicting Alzheimer's Disease by Hierarchical Graph Convolution from Positron Emission Tomography Imaging

[Paper]

[Code]

Jiaming Guo*, Wei Qiu*, Xiang Li*, Xuandong Zhao, Ning Guo, Quanzheng Li
Big Data 2019

Multi-size Computer-aided Diagnosis of Positron Emission Tomography Images Using Graph Convolutional Networks

[Paper]

[Code]

Xuandong Zhao*, Xiang Li*, Ning Guo, Zhiling Zhou, Xiaxia Meng, Quanzheng Li
ISBI 2019

UC Santa Barbara, USA
Ph.D. in Computer Science • Sept. 2019 - June 2024

Zhejiang University, China
B.E. in Computer Science • Sept. 2015 - June 2019, GPA: 3.96/4.00

Rising Star in AI, KAUST, 2025

Rising Star in Adversarial Machine Learning, AdvML Workshop, 2024

Chancellor's Fellowship, UC Santa Barbara, 2019, 2021, 2023

He Zhijun Scholarship (Highest honor in ZJU CS department), 2019

Alibaba-Zhejiang News Scholarship, 2018

National Scholarship (Top 0.2% Nationwide), 2016

First Prize in Chinese Physics Olympiad (CPhO; Top 0.1% in Shanxi Province, China), 2014

Last update: Dec 2025